Unlearning Language

Lauren Lee McCarthy and Kyle McDonald

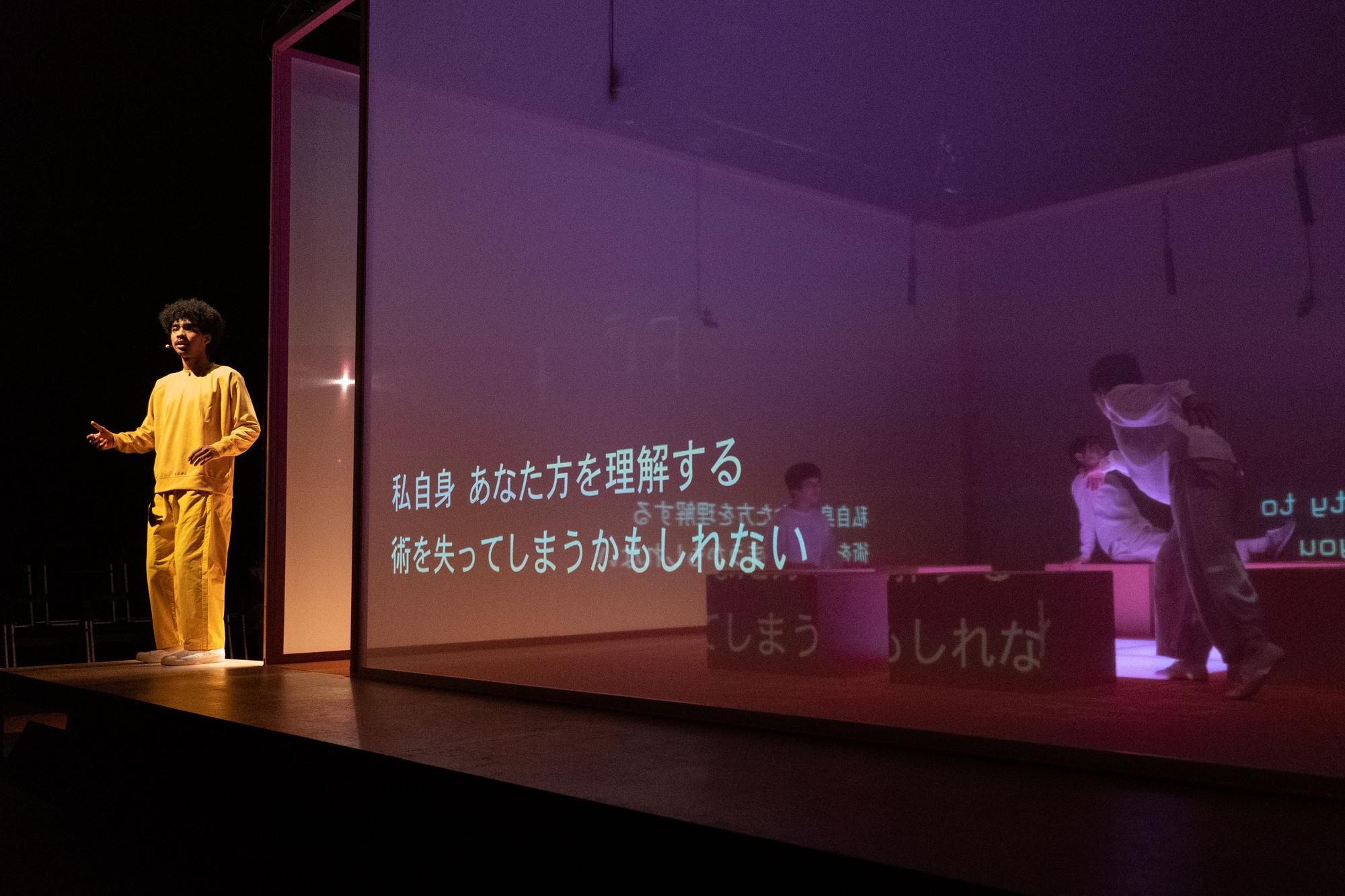

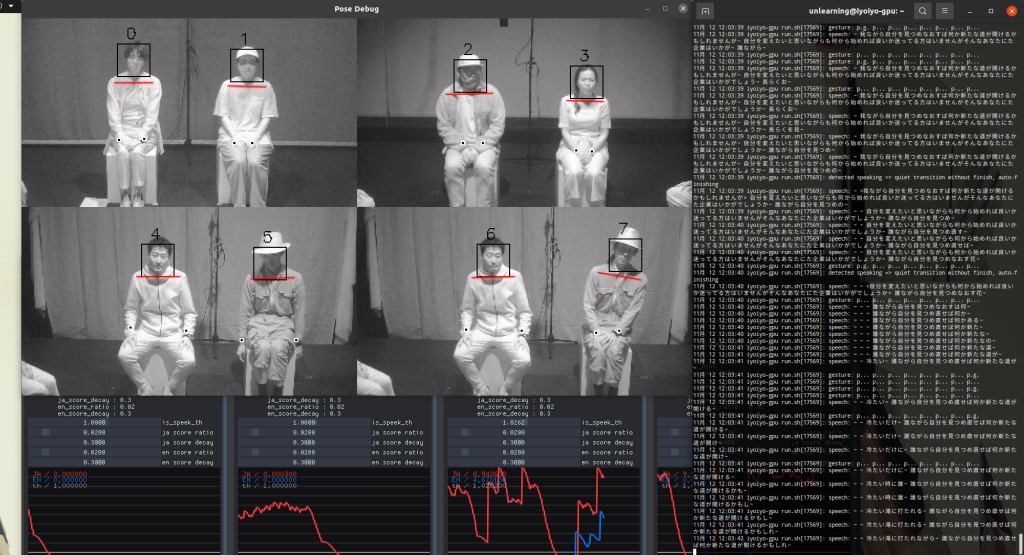

The project unfolds in a space where technology is both enabler and instigator. Running on custom software built with TouchDesigner, Python, Node.js, and GPT-3, the installation uses machine learning to detect speech, gestures, and facial expressions in real time. As participants interact, the AI “listens” and intervenes, shifting the room’s light, sound, and even vibration. These shifts prompt participants to change how they express themselves (clapping, humming, or altering their speech patterns) in a playful effort to find modes of communication that evade machine comprehension. The setup raises questions about the essence of language and offers a vision of communication that machines cannot predict or decode.

Unlearning Language is particularly timely in a world where human language is increasingly processed, stored, and manipulated by artificial intelligence. McCarthy and McDonald have noted in earlier work that they are interested in making art that asks: what might a world look like in which human communication thrives outside of machine detection? Drawing on essays and critical texts that question the neutrality of AI, the project engages with the growing discourse on digital surveillance and the homogenizing influence of algorithmic prediction. Through its carefully calibrated interactions, Unlearning Language lets participants experience the fragility of authentic, unrecorded human expression.

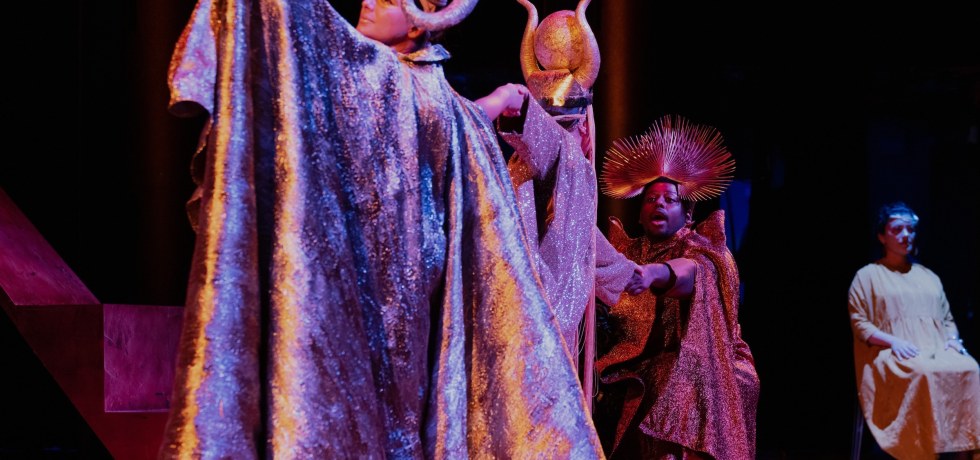

At once immersive and thought-provoking, Unlearning Language creates a space where participants can rediscover the richness of human language as a force for creativity, unpredictability, and intimacy. By encouraging the use of rhythms, pitches, and gestures that feel organic and undetectable, the installation pushes back against a future where communication may be constantly observed and recorded. McCarthy and McDonald’s installation stands as a call to imagine, and perhaps preserve, the deeply nuanced aspects of communication that make us human. It advocates for a future in which technology serves rather than defines human connection.

Lauren Lee McCarthy is an artist examining social relationships in the midst of surveillance, automation, and algorithmic living. She has received grants and residencies from Creative Capital, United States Artists, LACMA Art+Tech Lab, Sundance, Eyebeam, Pioneer Works, Autodesk, and Ars Electronica. Her work SOMEONE (2017) was awarded the Ars Electronica Golden Nica and the Japan Media Arts Social Impact Award, and her work LAUREN (2017) was given the IDFA DocLab Award for Immersive Non-Fiction. Lauren’s work has been exhibited internationally at such places as the Barbican Centre in London, Haus der elektronischen Künste in Basel, the Onassis Cultural Center in Athens, IDFA DocLab in Amsterdam, Science Gallery Dublin, and the Seoul Museum of Art.

An associate professor at UCLA Design Media Arts, Lauren holds an MFA from UCLA, as well as a BS in Computer Science and a BS in Art and Design from MIT. She is the creator of p5.js, an open-source art and education platform that prioritizes access and diversity in learning to code. She extends this work through her role on the Board of Directors of the Processing Foundation, whose mission is to help people who have historically lacked access to the fields of technology, code, and art learn software and visual literacy.

Kyle McDonald is an artist who works with code. He contributes to open-source arts-engineering toolkits such as openFrameworks, building tools that allow artists to use new algorithms creatively. He subverts network communication and computation, explores glitches and systemic bias, and extends these concepts to the reversal of everything from identity to relationships. He frequently leads workshops exploring computer vision and interaction. Previously an adjunct professor at NYU’s Interactive Telecommunications Program, he is a member of the Free Art and Technology Lab and community manager for openFrameworks. His work has been commissioned and exhibited worldwide, including at the NTT InterCommunication Center in Tokyo, Ars Electronica in Austria, Sonar/OFFF, Eyebeam in New York, the Anyang Public Art Project in South Korea, and the Cinekid Festival in Amsterdam.

McDonald previously worked at YCAM on Guest Research Project vol. 1, ProCamToolkit (2011), and on Reactor for Awareness in Motion (RAM) (2012–2013). Works he has exhibited include I Eat Beats (2011) and The Janus Machine (2011), a collaboration between Daito Manabe, Kyle McDonald, Zachary Lieberman, and Theodore Watson.

YCAM, the Yamaguchi Center for Arts and Media, is an art center in Yamaguchi, a city of almost 200,000 people at the far western end of Japan’s main island of Honshu, 930 kilometers (578 miles) west of Tokyo. Since opening in 2003 in a building designed by the Pritzker Prize-winning architect Arata Isozaki and his firm, YCAM has focused on producing and exhibiting original works that incorporate media technologies. YCAM InterLab, where Unlearning Language was developed, is the center’s internal research and development laboratory, staffed by about 20 resident members with skills in curation, education, engineering, and design. Unlearning Language was part of YCAM’s Sakoku [Walled Garden] Project, a three-year R&D program in which YCAM InterLab collaborates with artists and researchers to conduct workshops and create artworks on the theme of the future of information and the Internet.