Lure

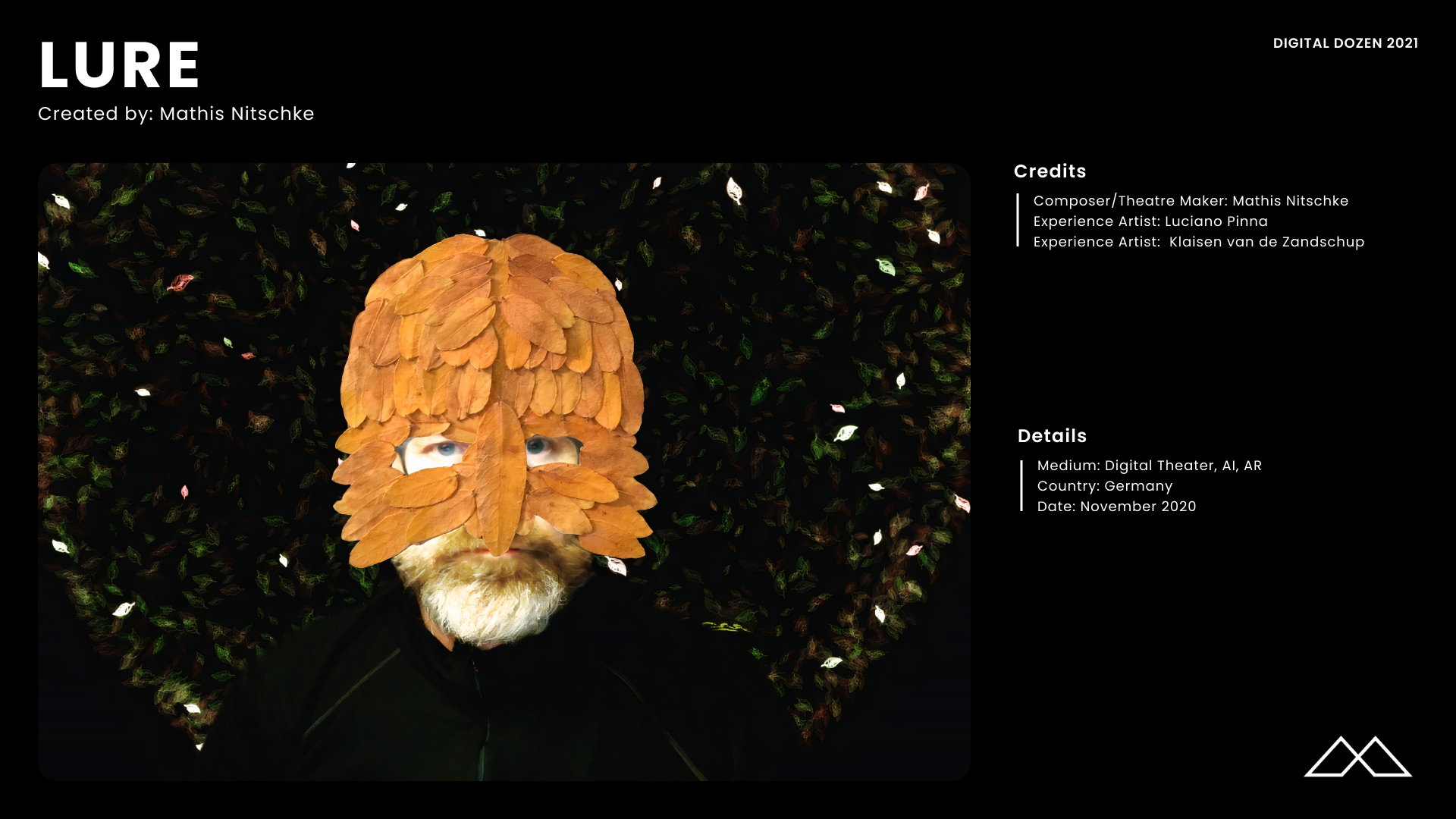

Lure is an artistic research project on machine learning in theater and music. It transfers questions about human-machine interaction and artificial intelligence to the fields of theater and music.

The narrative is based on the classic romance Parzival, by the medieval knight-poet Wolfram von Eschenbach. Parzival meets three elegant knights, decides to seek King Arthur, and continues a spiritual and physical search for the Grail, looking for the world outside the forest in which he was raised. Lure uses the character of Parzival, who is now developed inside a computer, with a similar intentionality. It wants to learn about you and the world and starts a conversation with you. It tries to express itself by playing with a musician. And it leaves its virtual confinement to explore the world by itself.

With these three prototypes, Lure’s creators studied three different artistic and technological approaches: developing empathy with the computational character by discovering how it learns, experiencing its inner workings through theatrical expression, and being touched by its musical sensitivity. An audience was invited to an open studio event for a playful session with music, performance and collective discovery: an interactive presentation of the three prototypes and the research behind them. Although still a work in progress, it was presented as a theatrically staged online performance.

ABOUT THE CREATORS

Lure is a project of Munich composer and theatre maker Mathis Nitschke, Amsterdam interactive artists Luciano Pinna and Klasien van de Zandschulp, Munich dramaturg Elsa Büsing, and three scientists from the Volkswagen Group Machine-Learning Research Lab: Patrick van der Smagt, Djalel Benbouzid and Nutan Chen.

Mathis Nitschke is an artist who combines the studies of classical guitar, fine arts and music composition with theater and opera as well as coding. In collaboration with artists from a wide variety of disciplines, Nitschke seeks to give contemporary music theater a new face. His work has been presented in galleries such as the Huis Marseille in Amsterdam and Storage by Hyundai Card in Seoul, in the Locarno Film Festival, in theaters such as the Münchner Kammerspiele and the Thalia Theater Hamburg, and at the Montpellier National Opera in France.

Much of Nitschke’s artistic work reflects on the processes of creating and listening to music. In his musical compositions and productions he often starts with improvised or semi-improvised performances by flesh-and-blood musicians in order to capture not only their tones but also their personality. As a film and theater composer, he has collaborated with institutions such as the Munich Philharmonic and ECM Records. He is also the founder of Sofilab, a Munich-based sonic design studio and innovation laboratory that functions at the interface of art, industry and technology.

Luciano Pinna is a conceptual artist, augmented reality designer and former physicist who lives and works in Amsterdam. His art work has been exhibited in Amsterdam, Berlin, London and New York.

Klasien van de Zandschulp is an Amsterdam-based interactive artist and creative director at affect lab, a creative studio and cultural insights practice in Amsterdam that aims to shift perspectives through community-based research, technology and storytelling. She designs story-based and participatory experiences, blending digital/physical and online/offline interactions. Her work explores sensory design, embodiment, rituals, augmented realities, human interaction and (radical) thoughts around our daily technology consumption.

As a dramaturg and project manager, Elsa Büsing creates, conceives and accompanies music-theatrical forms, performances in the context of digital technologies, artistic actions in public space and participative cultural formats. She also regularly oversees EU-funded international cooperation projects. In her ongoing PhD project at the University of Munich, she researches dialogicity in theater with a special focus on the theory and aesthetics of contemporary music theater; the relationship between space, location and performance; aesthetic perception; and art and digitality.

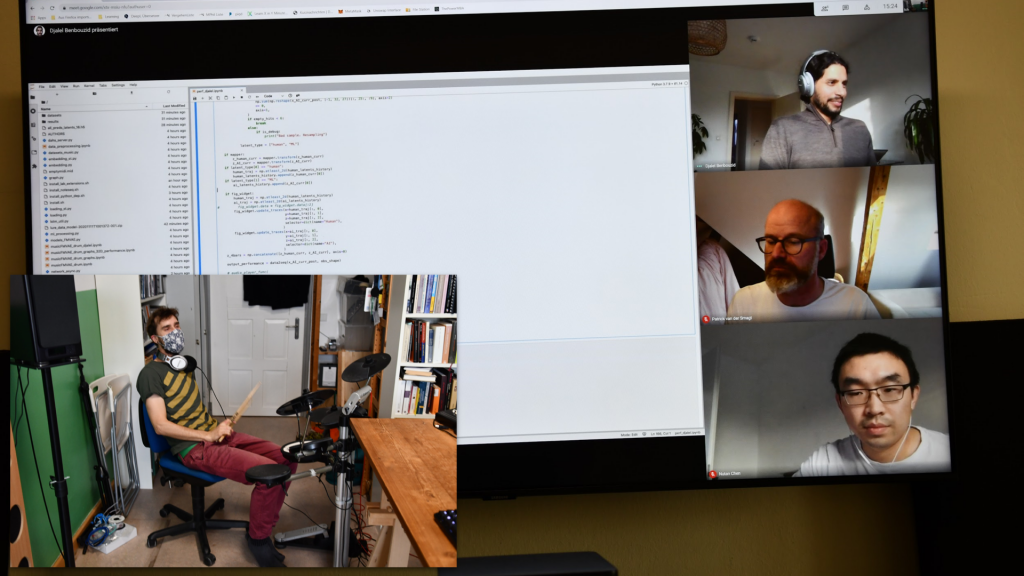

The Volkswagen Group Machine Learning Research Lab, established in 2016 in Munich, is a fundamental research lab that focuses on topics at the core of artificial intelligence: machine learning and probabilistic inference, efficient exploration and optimal control. A particular focus of its machine-learning research is creating latent-variable models with flat latent spaces, which makes them interpretable. This work was validated in an out-of-the-box application: drumming side by side with a human in Lure. The research was led by Patrick van der Smagt, director of AI research at Volkswagen Group and head of corporate policy related to trustworthy AI; Djalel Benbouzid, senior research scientist in machine learning and coordinator of a consortium on ethical and trustworthy artificial intelligence at Volkswagen Group; and Nutan Chen, AI researcher at Volkswagen’s Data:Lab Munich, which uses data to create predictive work models.

THREE QUESTIONS FOR THE CREATOR

Why this? Why now?

For some years now, I have been incorporating the digital technologies that are fundamentally changing our everyday lives and togetherness into my artistic work. For the music theatre pieces that emerge, I work as composer, director and producer all in one. I am supported and funded by the performing arts, music and the visual arts. As a society we fear, not without reason, that AI will call into question and change the foundations of our human self-understanding. However, I also observe with concern that we humans, with our current pressure for efficiency and the functionalization of our existence, adapt more quickly to the machines than the machines adapt to us. My aim and concern is not only to process these observations discursively but to transfer the subject matter from the inner logic of the new technologies into my own musical, textual and visual poetics. When thinking about making a project with and about artificial intelligence, I quickly realized that it would not be enough to “ask” an AI to write a poem or a piece of music, but that we wanted to have the AI itself as a protagonist on stage, as a counterpart to us “natural intelligences.”

What were you surprised to learn as you were making it?

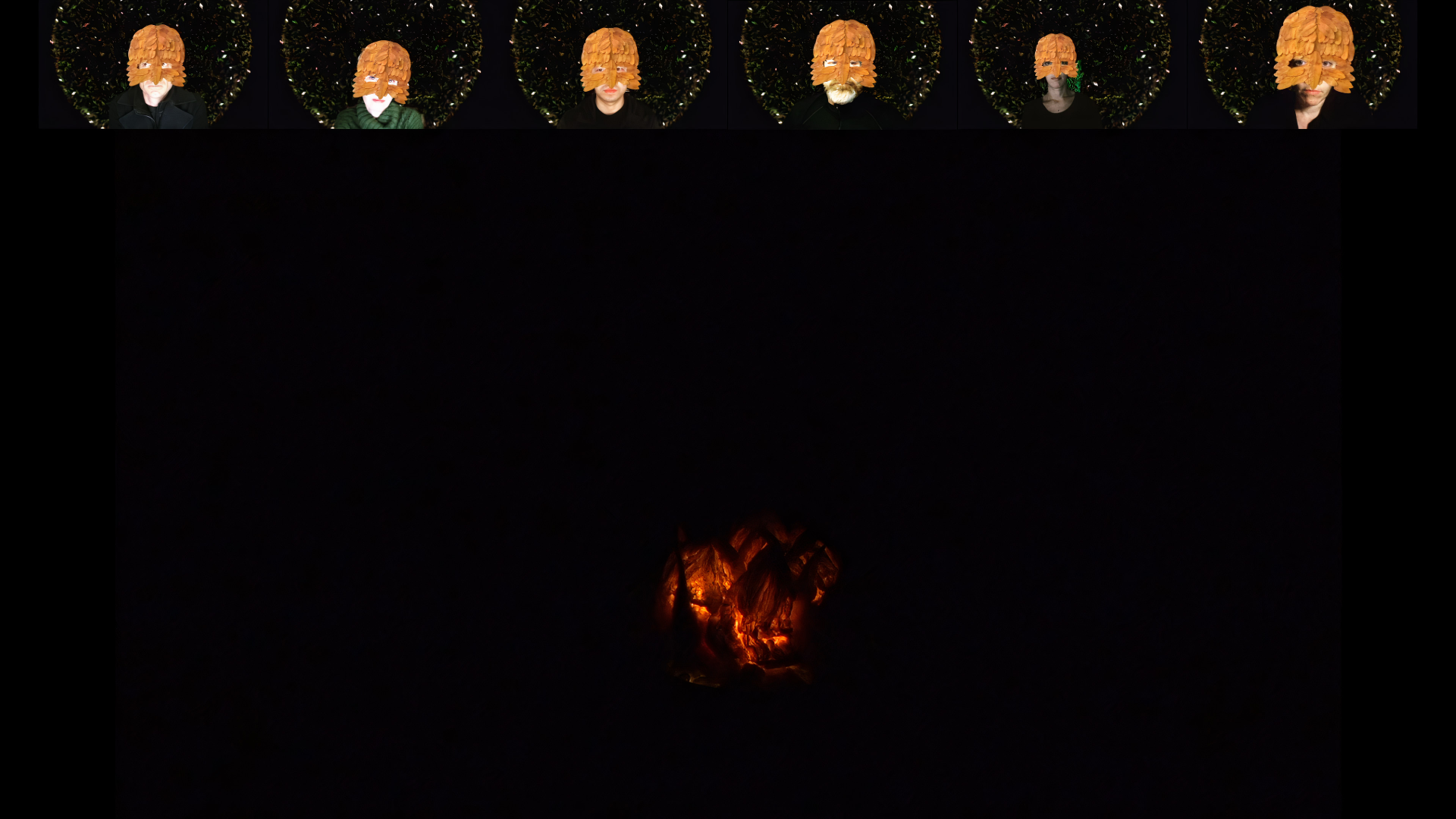

Originally meant to happen in the physical space of Munich’s Pathos theatre, this project also became a prototype for a theatrical staging in digital space because of the coronavirus. Usually a theatrical event relies on many people experiencing the same sensations in the same space. We took the digital meeting space most people know very well by now, Zoom, as the shared space in which our theatrical sensations should happen. We transformed Zoom into theatre by using AR masks (Snap Camera) and a special background. The performers and participants gathered around the fire, just as humans did in ancient times when they invented storytelling. This fire was present throughout the whole performance and served as a fixed reference. Although the project wasn’t actually conceived as an experiment in interactive online theatre, it became a very interesting prototype for us and one we definitely want to explore more.

What was the most challenging aspect for you?

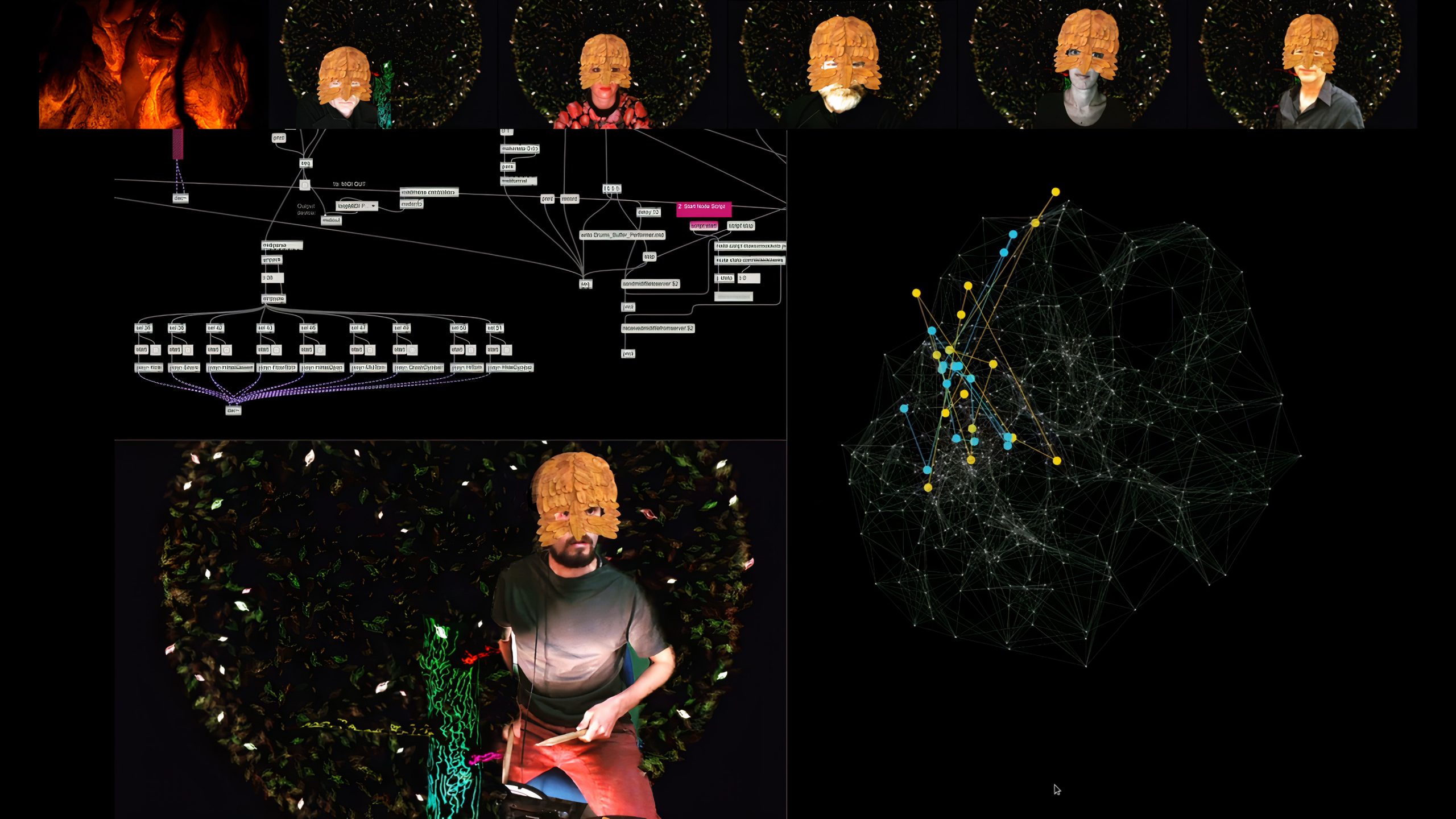

We really wanted to deliver interesting and innovative technology, too, not just make an interesting and compelling art project. One goal was to investigate whether a musician could perform live with an intelligent machine and create an improvised musical composition while playing together. One prototype was developed together with the Volkswagen Group Machine-Learning Research Lab, based on their cutting-edge AI research on recurrent neural networks and unsupervised learning. From our research we concluded that it was best to start by developing the prototype with a drummer as the musician and a model trained on Google Magenta’s Groove MIDI Dataset.

For the model we compressed 32 × 27-dimensional groove data into a 32-dimensional latent space using a flat-manifold variational autoencoder with recurrent networks for the encoder and decoder. The model flattens the latent space, so that the mapping between the latent space and the MIDI data is interpretable. Based on what the drummer has played so far during the performance, the model extrapolates in the latent space and generates the MIDI data for the next time step.

To connect the human drummer with the AI we used a MIDI drum kit that captured the hits played. These were processed via a custom-built Max patch and then sent to the AI via our custom-built realtime server. The answer from the AI was sent back to Max and played audibly for the drummer to react to. To overcome the latency between the musicians and the processing by the various systems, we opted for a two-bar question-response setup: the human would play, and the AI’s answer would follow exactly two bars later. This form (also known as a drum battle) created an interesting dynamic between drummer and AI in which the human drummer was both playing with the AI drummer and trying to play against it.
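The two-bar question-response timing can be illustrated with a small Python sketch. This is not the project's Max patch or server code. It assumes 4/4 time quantized to 16th notes (so one two-bar phrase is 32 steps), and `echo_model` is a hypothetical stand-in for the AI drummer that simply repeats the human phrase more quietly. Every name in the block is invented for illustration.

```python
from typing import Callable, Dict, List, Tuple

STEPS_PER_BAR = 16   # assumption: 4/4 time quantized to 16th notes
BARS_PER_PHRASE = 2  # from the text: a two-bar question-response form
PHRASE_STEPS = STEPS_PER_BAR * BARS_PER_PHRASE  # 32 steps per phrase

Hit = Tuple[int, int, int]  # (step, MIDI note number, velocity)


def echo_model(phrase: List[Hit]) -> List[Hit]:
    """Hypothetical stand-in for the AI: repeat the phrase, 20 velocity units quieter."""
    return [(step, note, max(1, velocity - 20)) for step, note, velocity in phrase]


def schedule_responses(
    hits: List[Hit],
    model: Callable[[List[Hit]], List[Hit]] = echo_model,
) -> List[Hit]:
    """Group human hits into two-bar phrases and place each answer one phrase later."""
    phrases: Dict[int, List[Hit]] = {}
    for step, note, velocity in hits:
        # Store each hit with its step relative to the start of its phrase.
        phrases.setdefault(step // PHRASE_STEPS, []).append(
            (step % PHRASE_STEPS, note, velocity)
        )

    scheduled: List[Hit] = []
    for index in sorted(phrases):
        answer_start = (index + 1) * PHRASE_STEPS  # exactly two bars after the question
        for rel_step, note, velocity in model(phrases[index]):
            scheduled.append((answer_start + rel_step, note, velocity))
    return scheduled


if __name__ == "__main__":
    human = [(0, 36, 100), (8, 38, 90), (40, 42, 10)]
    for hit in schedule_responses(human):
        print(hit)
```

Because the answer is always placed a whole phrase later, any processing time shorter than two bars is hidden from the listener, which is the reason the text gives for choosing this form.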